Can JVM performance in the cloud really compete with bare-metal metrics?

I always like talking to Gil Tene, the CTO of Azul Systems.

Before we jump on the phone or sit down for a talk, his team usually sends me a bunch of boring slides to go through, typically boasting about their forthcoming Zing or Zulu release, along with their latest JVM performance benchmarks. But it’s been my experience that if I can jump in early with technical questions before Tene starts force-feeding me a PowerPoint presentation, I can hijack the call and get some interesting answers to tough questions about Java and JVM performance. The CTO title often implies that you’ll be chatting with a suit without substance, but Tene is a chief technical officer with some real ‘down-in-the-weeds,’ erudite knowledge of how high-performance computing works.

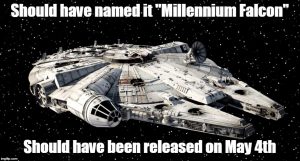

A clever marketing opportunity missed

The reason for our latest talk was Azul Systems’ 17.3 release of Zing, which includes the LLVM-based just-in-time compiler code-named Falcon. In my estimation, Azul Systems really dropped the ball here in terms of marketing. Instead of calling it Falcon and doing the release on May 2nd, they could have given it the moniker The Millennium Falcon and released it on May the 4th. Now that would have been clever, but sadly, that opportunity has been missed.

Prognosticating the fate of the JVM

Before being subjected to Tene’s PowerPoint presentation, which would invariably extol Zing and Falcon’s latest JVM performance metrics, I figured I’d bear-bait Tene a bit by suggesting that it must be tough working in a dying industry.

Anyone who’s been paying attention to the cloud computing trend knows that everyone is now architecting serverless applications written in Golang, Node.js, Python or some other hot non-JVM language, eliminating the need for a product like Zing or Zulu. And all of these serverless applications are getting deployed into pre-provisioned containers running in cloud-based environments where there’s no longer a need to install a high-performance JVM. After all, nobody’s going out and buying bare-metal servers anymore, so there must be a decline in people purchasing Zing licenses or downloading Zulu JVMs, right? Tene wasn’t biting.

“Where the hardware comes from, or whether it’s a cloud environment or a public cloud, a private cloud, a hybrid cloud, a data center, or whatever they want to call it, we’ve got a place to sell our high-performance JVM,” said Tene. “And that doesn’t seem to be happening less, that seems to be happening more.”

To be honest, I was hoping Tene would inadvertently slip and reveal some closely guarded secret about how his team had discovered a trick to unlocking infinite performance capabilities by using Zing and the cloud in some weird and unusual way. Instead, it seems that Zing in the cloud isn’t that much different from Zing in the local data center. “Most of what is run on Amazon today is run as virtual instances running on a public cloud,” said Tene. “And they end up looking like normal servers running Linux on x86 machines, but they run on Amazon. And they do it very efficiently, very elastically and they are very operationally dynamic. Zing and Zulu run just fine in those environments. Whether people consume them on Amazon or Azure or on their own servers, to us it all looks the same.”

Squaring the JVM performance circle

Of course, when I talk to Tene about things like Falcon, Zing and Zulu, JVM performance tends to be the central theme, and that theme would appear to run counter to the whole idea of containers and virtualization. To me, that’s a difficult circle to square because on the one hand, you are selling JVM performance, yet on the other hand the deployment model incorporates various layers of performance-eating abstraction with Docker, VMware, hypervisors and all of the other obnoxious layers of indirection that cloud computing entails. After all, if peak JVM performance is the ultimate goal, why not just buy a massive mainframe, or even clustered commodity hardware, and deploy your LLVM-based JIT compiler to some beautiful bare-metal?

“A lot of what we see today is virtualized, but we do see a bunch of bare-metal, either in latency-sensitive environments, or in dedicated throughput environments,” said Tene. An organization with a hybrid cloud environment leveraging both public and private cloud might use a dedicated, bare-metal machine for its database. Low-latency trading systems and messaging infrastructure are also prime candidates for bare-metal deployments. In these instances, JVM performance is a top priority, and a Zing instance would run on what historians refer to as “a host operating system” without any abstraction or virtualization. “They don’t want to take the hit for what the virtualized infrastructure might do to them. But having said that, we are seeing some really good results in terms of consistency, latency and JVM performance just running on the higher-end Amazon instances.”

JVM performance and bare-metal computing

Maybe it’s because I cut my teeth on enterprise systems that weren’t virtualized, but the discussion of Azul’s high-performance JVMs running on bare iron warms my cold heart. It’s good to know that when it comes to JVM performance, there are still places in the world where cold steel trumps the hot topic of containers and cloud-native computing. And I refuse to believe Tene’s assertion that some of the high-end Amazon instances provide JVM performance metrics that approach what can be done with bare-metal. Assertions like that simply don’t fit with my politics, so I reject them.

You can follow Gil Tene on Twitter: @giltene

You can follow Cameron McKenzie too: @cameronmckenzie
