Understanding the Kubernetes Container Runtime Interface

Standards and conventions are an essential part of computer technology. For instance, the HTTP and TCP/IP standards make the Internet possible. The JSON and YAML data formats enable platform-agnostic data exchange. MP3 and MP4 drive media streaming.

However, standards and conventions do not appear by magic. They evolve. Typically, companies start out doing things their own way. Over time, more companies start doing the same thing, and they cooperatively, and selectively, standardize so that each can innovate more easily in its own self-interest. TCP/IP, Ethernet, the RS-232 standard for wired serial connections, and the IEEE 802.11 family of wireless protocols all emerged from a hodgepodge of proprietary networking technologies.

Standards and conventions broaden possibilities. They do not limit them.

This is also true of the container orchestration framework Kubernetes. Initially, Kubernetes used only Docker to create the containers that ran on the machines in a Kubernetes cluster. Docker was very popular at the time, and arguably pushed container technology into the IT mainstream. Other container engines emerged, but Kubernetes remained tightly bound to Docker. That changed in December 2016 with the release of Kubernetes version 1.5, which introduced the Container Runtime Interface (CRI) to break the tight binding to Docker so that any CRI-compatible container engine can run under Kubernetes.

How does this all work? Let’s take a look at the details and start with the nature of an interface.

Understanding a programming interface

To understand how the CRI works, you need to understand what an interface is.

An interface is a programming artifact that describes what a software component is supposed to do, but does not describe how to do it. Think of an interface as a contract between the component and the consumer of the component. Both parties agree to interact according to the definition published by the component.

It’s akin to buying a taco at a taco truck. There is an implicit contract in play. The person taking the order at the window of the truck supports ordering, paying and handing over the taco. The buyer knows that the order-taker supports ordering, paying and handing over the taco. Both parties participate according to the methods described by the common interface for buying a taco. However, how the taco actually gets made is the private business of the taco truck.

The example below shows an interface named ICalculator, written in the C# programming language.

    using System;

    // The interface declares what a calculator can do, not how it does it.
    interface ICalculator
    {
        double add(double a, double b);
        double subtract(double a, double b);
        double multiply(double a, double b);
        double divide(double a, double b);
    }

Notice that the interface ICalculator publishes each method’s name, parameters and return type. However, there is no behavior, only a description of the methods that an implementing class must provide.

Now take a look at the next example below. A class named Calculator implements the interface ICalculator, and the methods in the class have actual behavior.

    using System;

    // Calculator supplies the behavior for every method that ICalculator declares.
    class Calculator : ICalculator
    {
        public double add(double a, double b)
        {
            return a + b;
        }

        public double subtract(double a, double b)
        {
            return a - b;
        }

        public double multiply(double a, double b)
        {
            return a * b;
        }

        public double divide(double a, double b)
        {
            return a / b;
        }
    }

In programming parlance we can say that the class Calculator supports the interface ICalculator. It gets really interesting when you mix in a programming principle called programming to the interface.

Programming to the interface means that the developer works only with the interface of a component. The developer does not need to know the details of a component’s implementation, only how it’s supposed to work, hence the interface. The C# code snippet below demonstrates the concept:

    using System;

    namespace SimpleInterface
    {
        interface ICalculator
        {
            double Add(double a, double b);
            double Subtract(double a, double b);
            double Multiply(double a, double b);
            double Divide(double a, double b);
        }

        // a class that implements the interface, ICalculator
        class Calculator : ICalculator
        {
            public double Add(double a, double b)
            {
                return a + b;
            }

            public double Subtract(double a, double b)
            {
                return a - b;
            }

            public double Multiply(double a, double b)
            {
                return a * b;
            }

            public double Divide(double a, double b)
            {
                return a / b;
            }
        }

        class Program
        {
            static void Main(string[] args)
            {
                // Declare a variable of type ICalculator that refers to
                // a new instance of the Calculator class
                ICalculator calculator = new Calculator();

                Console.WriteLine(calculator.Add(1, 2));
                Console.WriteLine(calculator.Subtract(1, 2));
                Console.WriteLine(calculator.Multiply(1, 2));
                Console.WriteLine(calculator.Divide(1, 2));
            }
        }
    }

Notice the statement:

ICalculator calculator = new Calculator();

This statement creates a new instance of the Calculator class but assigns it to a variable of type ICalculator, so the code that follows can use only the members that the interface declares. Thus, the developer is programming to the interface.

The implication of the programming-to-the-interface principle is that a developer can write the same code against any number of software components that support the interface, with predictable results. As in the taco truck example described above, as long as buyers know how the purchase-taco interface works, they can buy tacos from any taco truck regardless of what goes on inside it.
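As a minimal sketch of that implication, the example below adds a second implementation of ICalculator. The names RoundingCalculator and Share are hypothetical, introduced only for illustration, and the interface is trimmed to two methods to keep the listing short. The Share method accepts only the interface, so it runs unchanged against either implementation.

```csharp
using System;

namespace InterfaceSwapSketch
{
    public interface ICalculator
    {
        double Add(double a, double b);
        double Divide(double a, double b);
    }

    // Plain implementation: returns the raw arithmetic result.
    public class Calculator : ICalculator
    {
        public double Add(double a, double b) { return a + b; }
        public double Divide(double a, double b) { return a / b; }
    }

    // Hypothetical second implementation: rounds every result to two decimals.
    public class RoundingCalculator : ICalculator
    {
        public double Add(double a, double b) { return Math.Round(a + b, 2); }
        public double Divide(double a, double b) { return Math.Round(a / b, 2); }
    }

    public static class Program
    {
        // Computes a's share of (a + b) using only the interface's methods.
        public static double Share(ICalculator calculator, double a, double b)
        {
            double total = calculator.Add(a, b);
            return calculator.Divide(a, total);
        }

        public static void Main(string[] args)
        {
            ICalculator[] calculators = { new Calculator(), new RoundingCalculator() };
            foreach (ICalculator calculator in calculators)
            {
                Console.WriteLine(calculator.GetType().Name + ": " + Share(calculator, 1, 2));
            }
        }
    }
}
```

The first call reports one third at full double precision and the second reports it rounded to two decimal places, yet Share itself never changes.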

The role of Kubelet

The implication of the programming-to-the-interface principle carries over directly to working with container runtimes under Kubernetes. Before we examine that connection, however, we need to review the role that the kubelet component plays in Kubernetes container orchestration.

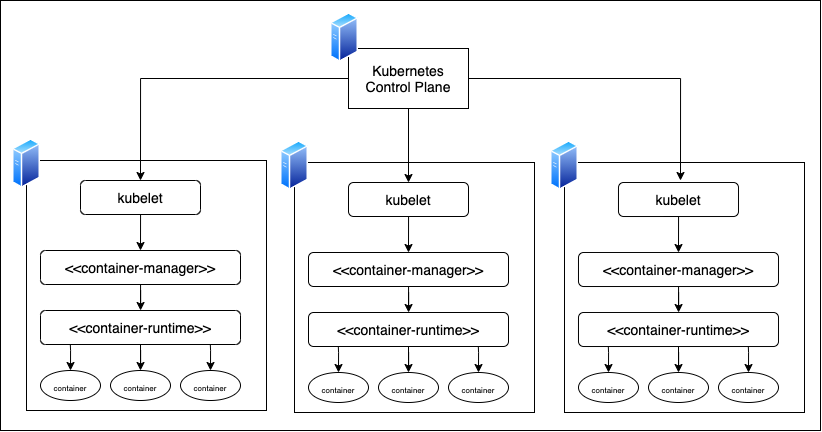

A Kubernetes cluster is separated into two sets of machines, which may be virtual or physical: the control plane and the worker nodes. The control plane manages the cluster, while the worker nodes run the application workloads. Containers that run on one or many worker nodes execute the application logic.

The component that creates the containers on a worker node is called kubelet. Each worker node in a Kubernetes cluster runs an instance of kubelet.

The control plane determines when containers must be created and which worker node in the cluster has the capacity to host them. It then contacts the kubelet instance that’s running on the identified node and directs the kubelet to create the required containers. Kubelet then works with internal components on the node to create the containers. (See Figure 1, below.)

Figure 1: An instance of kubelet running on each worker node in a Kubernetes cluster realizes containers on the node.
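The flow in Figure 1 can be sketched as a toy model. Everything in it is a hypothetical simplification for illustration: the Kubelet class, its FreeSlots capacity counter and the ControlPlane.Schedule method are assumptions, not Kubernetes APIs, and a real scheduler weighs far more than a single capacity number.

```csharp
using System;
using System.Collections.Generic;

namespace KubeletFlowSketch
{
    // Hypothetical stand-in for the kubelet agent on one worker node.
    public class Kubelet
    {
        public string NodeName { get; private set; }
        public int FreeSlots { get; private set; }
        public List<string> Containers { get; private set; }

        public Kubelet(string nodeName, int freeSlots)
        {
            NodeName = nodeName;
            FreeSlots = freeSlots;
            Containers = new List<string>();
        }

        // Called by the control plane; the node-level work happens here.
        public void CreateContainer(string image)
        {
            Containers.Add(image);
            FreeSlots--;
        }
    }

    // Hypothetical stand-in for the control plane.
    public class ControlPlane
    {
        private readonly List<Kubelet> kubelets;

        public ControlPlane(List<Kubelet> kubelets)
        {
            this.kubelets = kubelets;
        }

        // Picks the first node with spare capacity and directs its kubelet.
        public Kubelet Schedule(string image)
        {
            foreach (Kubelet kubelet in kubelets)
            {
                if (kubelet.FreeSlots > 0)
                {
                    kubelet.CreateContainer(image);
                    return kubelet;
                }
            }
            throw new InvalidOperationException("No node has capacity for " + image);
        }
    }

    public static class Program
    {
        public static void Main(string[] args)
        {
            var nodes = new List<Kubelet> { new Kubelet("node-a", 1), new Kubelet("node-b", 2) };
            var controlPlane = new ControlPlane(nodes);

            foreach (string image in new[] { "web", "api", "cache" })
            {
                Kubelet chosen = controlPlane.Schedule(image);
                Console.WriteLine(image + " -> " + chosen.NodeName);
            }
        }
    }
}
```

In this sketch the control plane only decides and delegates; the kubelet on the chosen node is the component that records the new container.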

As mentioned previously, when Kubernetes first came out, kubelet agents worked directly with Docker to create and run containers. Over time, kubelet required more flexibility so the tight binding between kubelet and Docker needed to be broken. This is where the CRI comes into play.

Where the CRI fits in

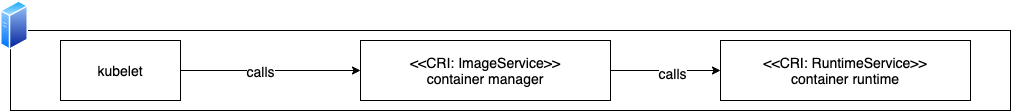

The CRI describes the contract by which kubelet interacts with container realization components to create and run containers on a worker node.

The CRI is divided into two parts: the interface for the ImageService and the interface for the RuntimeService. These interfaces are defined using Protocol Buffers (protobuf), and kubelet communicates with the components implementing the CRI over gRPC.

The CRI ImageService interface describes the methods to work with container images, which are the abstract templates from which a container is created. Operationally, the component that implements the ImageService interface is called a container manager.

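The CRI RuntimeService interface describes the methods for creating, starting, stopping and removing containers and the pod sandboxes that hold them. In practice, the container manager serves this interface too, and hands the low-level work of creating each container off to a separate component called the container runtime.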

There are many container managers available that support the CRI, such as containerd and CRI-O. Some examples of container runtimes include runc and runsc.

Be advised that the term container runtime is used in different contexts among companies and projects. Sometimes the term describes both the container manager and the container runtime. Other times it refers specifically to the actual container runtime. In this article, the term container runtime refers specifically to the low-level component that creates containers in memory, for example runc.

Figure 2 below shows how the kubelet works with the CRI to handle containers.

Figure 2: CRI services describe how containers are to be managed and created by kubelet.
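To connect this back to the ICalculator example, here is a simplified sketch of the two CRI services expressed as C# interfaces. The method names PullImage, ListImages, CreateContainer and StartContainer mirror RPC names in the CRI definition, but the signatures are heavily simplified assumptions: the real services are protobuf definitions called over gRPC, exchange request and response messages, and also manage pod sandboxes. InMemoryImageService, InMemoryRuntimeService, StateOf and KubeletSketch are hypothetical names used only for illustration.

```csharp
using System;
using System.Collections.Generic;

namespace CriSketch
{
    // Simplified stand-in for the CRI ImageService.
    public interface IImageService
    {
        void PullImage(string image);
        IList<string> ListImages();
    }

    // Simplified stand-in for the CRI RuntimeService.
    public interface IRuntimeService
    {
        string CreateContainer(string image); // returns a container ID
        void StartContainer(string containerId);
    }

    public class InMemoryImageService : IImageService
    {
        private readonly List<string> images = new List<string>();

        public void PullImage(string image)
        {
            if (!images.Contains(image)) images.Add(image);
        }

        public IList<string> ListImages() { return new List<string>(images); }
    }

    public class InMemoryRuntimeService : IRuntimeService
    {
        private readonly Dictionary<string, string> states = new Dictionary<string, string>();
        private int nextId = 1;

        public string CreateContainer(string image)
        {
            string id = "c" + nextId++;
            states[id] = "created";
            return id;
        }

        public void StartContainer(string containerId)
        {
            if (!states.ContainsKey(containerId))
                throw new ArgumentException("Unknown container: " + containerId);
            states[containerId] = "running";
        }

        // Helper for this sketch only; not part of the interface.
        public string StateOf(string containerId) { return states[containerId]; }
    }

    // Hypothetical kubelet-like caller: it sees only the two interfaces.
    public class KubeletSketch
    {
        private readonly IImageService imageService;
        private readonly IRuntimeService runtimeService;

        public KubeletSketch(IImageService imageService, IRuntimeService runtimeService)
        {
            this.imageService = imageService;
            this.runtimeService = runtimeService;
        }

        // Pull the image, create the container, then start it.
        public string Run(string image)
        {
            imageService.PullImage(image);
            string id = runtimeService.CreateContainer(image);
            runtimeService.StartContainer(id);
            return id;
        }
    }

    public static class Program
    {
        public static void Main(string[] args)
        {
            var images = new InMemoryImageService();
            var runtime = new InMemoryRuntimeService();
            var kubelet = new KubeletSketch(images, runtime);

            string id = kubelet.Run("nginx:latest");
            Console.WriteLine(id + " is " + runtime.StateOf(id)); // prints "c1 is running"
        }
    }
}
```

Because KubeletSketch depends only on the two interfaces, any pair of classes that honors the same contracts could be passed to its constructor without changing the Run method.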

Around the time that the CRI effectively decoupled Docker from Kubernetes, Docker revised its own architecture. Originally, Docker shipped the container manager, the container runtime and the CLI tools for developers to interact with the container manager in a single deployment. However, that made Docker bulky, and it also violated a fundamental principle of software design: a component should do only one thing.

So, Docker separated out the CLI tool, the container manager (containerd) and the container runtime (runc) into distinct deployment units. containerd supports the CRI and uses runc to create containers, and the pair is a common default in Kubernetes distributions.

Putting it all together: Kubernetes and CRI

The CRI gives Kubernetes the flexibility to run a variety of container managers and container runtimes, so companies can use the container manager and container runtime technology that best suits their needs.

Many companies choose to work with the commonly used default pairing of container manager, containerd, and container runtime, runc. However, some companies have operational requirements to isolate containers more strongly from the host computer’s kernel. In such a case, Kata Containers with containerd runs containers inside lightweight virtual machines to provide a high degree of isolation.

The importance of the CRI is that it makes Kubernetes more flexible. Yet, as is true in many cases, increasing flexibility increases complexity. At the operational level, working with the CRI beyond the default containerd/runc configuration requires some mastery of the low-level aspects of Kubernetes, and there is a significant learning curve.

For companies that run complex, mission-critical applications that support millions of users, that steep learning curve is a worthwhile investment. The thing to keep in mind is that the CRI was intended to make Kubernetes more powerful. As the explosion in container manager and container runtime technologies demonstrates, the CRI is accomplishing its goal.