How to set up an Nginx load balancer example

How to configure Nginx as a load balancer

To set up Nginx as a load balancer for backend servers, follow these steps:

- Open the Nginx configuration file with elevated rights

- Define an upstream element and list each node in your backend cluster

- Map a URI to the upstream cluster with a proxy_pass location setting

- Restart the Nginx server to incorporate the config changes

- Verify successful configuration of the Nginx load balancer setup

Which Nginx config file should I edit?

As your Nginx configuration grows, the file in which the upstream element and the proxy_pass location setting are configured may change.

For this Nginx load balancer example, we will edit the file named default, which is in the /etc/nginx/sites-available folder.

How do I use the Nginx upstream setting?

All of the backend servers that work together in a cluster to support a single application or microservice should be listed together in an Nginx upstream block.

In our example, two localhost servers run on separate ports. Each is listed as a server that is part of the Nginx upstream set named samplecluster:

    upstream samplecluster {
        server localhost:8080;
        server localhost:8090;
    }

Is the load balancer also a reverse proxy?

The Nginx load balancer will also act as a reverse proxy.

To do this, Nginx needs to know which URLs it should forward to the load-balanced cluster. You must configure a location element with an Nginx proxy_pass entry in the default configuration file.

    location /sample {
        proxy_pass http://samplecluster/sample;
    }

With this configuration, all requests to Nginx whose path begins with /sample will be forwarded to one of the two application servers listed in the upstream element named samplecluster.

The Nginx load balancer settings will be written to the default file in the sites-available folder.

Complete Nginx load balancer config file

Here is the full config file used in this Nginx load balancer example:

    ### Nginx Load Balancer Example
    upstream samplecluster {
        # The upstream element lists all
        # the backend servers that take part in
        # the Nginx load balancer example
        server localhost:8090;
        server localhost:8080;
    }

    ### Nginx load balancer example runs on port 80
    server {
        listen 80 default_server;
        listen [::]:80 default_server;
        root /var/www/html;
        server_name _;

        location / {
            try_files $uri $uri/ =404;
        }

        # The proxy_pass setting will also make the
        # Nginx load balancer a reverse proxy
        location /sample {
            proxy_pass http://samplecluster/sample;
        }
    }
    # End of Nginx load balancer and reverse proxy config file

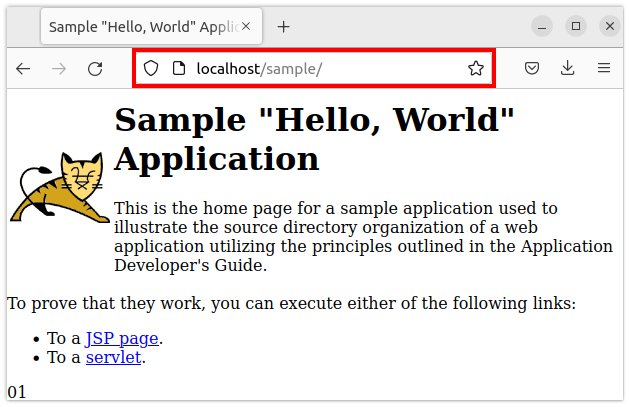

Test Nginx as a reverse proxy load balancer

With the Nginx load balancer configured, restart the server and test the load balancer:

sudo service nginx restart

When the server restarts, Nginx distributes all requests for the /sample URL across the load-balanced Apache Tomcat servers on the backend.

A request sent to port 80 and answered by Tomcat shows that the Nginx reverse proxy load balancer is configured correctly.
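The check described above can also be scripted. The following is a minimal sketch, not part of the original setup: the function names fetch_status and tally_statuses are hypothetical, and the URL assumes Nginx listens on port 80 of the local machine as in the config file shown earlier. A 200 status only shows that something answered, so confirm in the response body or the Tomcat logs that a backend actually handled the request.

```python
from collections import Counter
from urllib.error import HTTPError
from urllib.request import urlopen


def fetch_status(url, timeout=2.0):
    """Return the HTTP status code for url, or "unreachable" on a network failure."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return response.status
    except HTTPError as exc:
        # 4xx and 5xx responses still show that a server answered.
        return exc.code
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return "unreachable"


def tally_statuses(url, attempts, fetch=fetch_status):
    """Request url several times and count each outcome."""
    return Counter(fetch(url) for _ in range(attempts))


if __name__ == "__main__":
    # Assumption: Nginx listens on port 80 of this machine.
    print(tally_statuses("http://localhost/sample", 10))
```

If every attempt is counted as "unreachable", Nginx is probably not running. A run of 502 responses usually means Nginx is up but could not reach the backends.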

How do you add a weight to an Nginx load balanced server?

A common configuration to add to the Nginx load balancer is a weighting on individual servers.

If one server is more powerful than another, you should make it handle a larger number of requests. To do this, add a higher weighting to that server.

    upstream samplecluster {
        server localhost:8090 weight=10;
        server localhost:8080 weight=20;
    }

In this example, the server running on port 8080 will receive twice as many requests as the server on port 8090, since its weight is double that of its counterpart.
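To see how a 10 to 20 weighting turns into a 1 to 2 split, here is a small Python simulation. It is a sketch modeled on the smooth weighted round-robin idea and should be read as an illustration, not as Nginx's exact implementation. The function name smooth_weighted_round_robin is hypothetical.

```python
from collections import Counter


def smooth_weighted_round_robin(weights, num_requests):
    """Return the backend picked for each of num_requests requests.

    weights maps a backend name to its integer weight.
    """
    current = {name: 0 for name in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(num_requests):
        # Every backend earns credit in proportion to its weight.
        for name, weight in weights.items():
            current[name] += weight
        # The backend holding the most credit serves this request...
        chosen = max(current, key=current.get)
        # ...and pays back the total, which gives the others their turn.
        current[chosen] -= total
        picks.append(chosen)
    return picks


weights = {"localhost:8090": 10, "localhost:8080": 20}
picks = smooth_weighted_round_robin(weights, 30)
counts = Counter(picks)
print(counts["localhost:8080"], counts["localhost:8090"])  # prints "20 10"
```

Only the ratio between the weights matters in this model, so weights of 1 and 2 would produce the same split as 10 and 20.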

How do you implement sticky sessions in Nginx?

Stateful applications rely on the fact that even in a highly clustered environment, the reverse proxy tries to send requests from a given client to the same backend server on each request-response cycle.

Nginx supports sticky sessions if the ip_hash element is added to the upstream cluster configuration:

    upstream samplecluster {
        ip_hash;
        server localhost:8090 weight=10;
        server localhost:8080 weight=20;
    }
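The idea behind ip_hash can be sketched in a few lines of Python. The Nginx documentation states that the first three octets of a client's IPv4 address serve as the hashing key. Everything else here is an assumption made for illustration: the function name pick_backend is hypothetical, and zlib.crc32 is a stand-in, since Nginx uses its own hash function internally.

```python
import zlib


def pick_backend(client_ip, backends):
    """Map an IPv4 client address to a backend, honoring weights.

    backends is a list of (name, weight) tuples.
    """
    # Key on the first three octets, so a whole /24 network shares one backend.
    key = ".".join(client_ip.split(".")[:3])
    total = sum(weight for _, weight in backends)
    # crc32 is only a stand-in for whatever hash the real server uses.
    slot = zlib.crc32(key.encode("ascii")) % total
    for name, weight in backends:
        if slot < weight:
            return name
        slot -= weight


backends = [("localhost:8090", 10), ("localhost:8080", 20)]
first = pick_backend("203.0.113.7", backends)
again = pick_backend("203.0.113.7", backends)
neighbor = pick_backend("203.0.113.99", backends)
print(first == again == neighbor)  # prints "True"
```

Because the mapping depends only on the client address, repeat requests land on the same backend without Nginx storing any session state. The trade-off is that many clients behind one shared IP address all go to the same server.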

How do you configure an Nginx load balancer when a server is offline?

If one of the upstream servers in an Nginx load balanced environment goes offline, it can be marked as down in the config file.

    upstream samplecluster {
        ip_hash;
        server localhost:8090 weight=10;
        server localhost:8080 weight=20;
        server localhost:8070 down;
    }

If an upstream server is marked as being down, the Nginx load balancer will not forward any requests to that server until the flag is removed.

Cameron McKenzie is an AWS Certified AI Practitioner, Machine Learning Engineer, Solutions Architect and author of many popular books in the software development and Cloud Computing space. His growing YouTube channel training devs in Java, Spring, AI and ML has well over 30,000 subscribers.
